Research Areas

Prof. YANG’s work focuses on prognostic health monitoring and robotics technologies for intelligent safety monitoring in smart cities. Fundamental research covers data-driven condition monitoring of electromechanical equipment in Internet of Things environments, with a focus on multimodal signal processing, intelligent diagnosis, and dynamic resilience monitoring. Key research in robotics includes machine vision-based perception, 3D shape recognition, and agile robot control for safety monitoring applications.

Research areas include:

- Intelligent Fault Diagnosis and Prognostic Health Management of engineering systems

- Safe Service Guarantee of distributed heterogeneous urban equipment

- Machine Learning-based robot perception and control for safety monitoring

Intelligent Fault Diagnosis and Prognostic Health Management

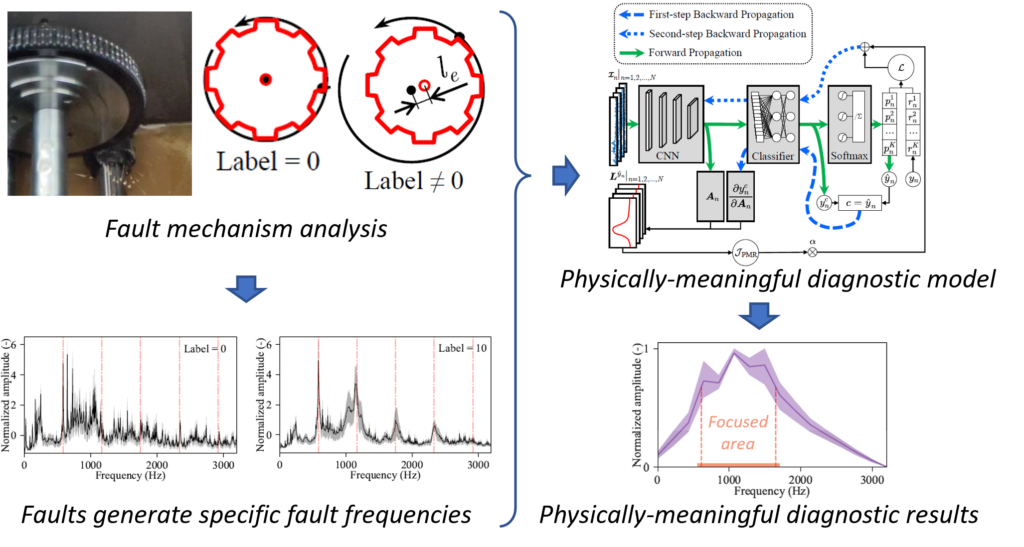

Physically-meaningful Intelligent Fault Diagnosis

We propose a physically meaningful diagnostic model that uses gradient-weighted class activation mapping (Grad-CAM) to guide the network to consistently focus on the relevant frequency bands of the input spectra while ignoring noisy and irrelevant parts of the signal. The model not only reveals which regions of the vibration spectrum drive its decisions, helping users understand the decision-making process, but is also more robust to noise.
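To make the Grad-CAM idea concrete, here is a minimal sketch of how a relevance map over a spectrum can be computed. It assumes a hypothetical toy model in which K one-dimensional feature maps (over frequency bins) are global-average-pooled and fed to a linear classifier; for that architecture the gradient of the class score with respect to every activation is known in closed form, so no autodiff library is needed. It only computes the map; how the map is used to guide training in the group's model is not shown, and all names are illustrative.

```python
from typing import List


def grad_cam_1d(feature_maps: List[List[float]], class_weights: List[float]) -> List[float]:
    """Grad-CAM for a toy model: score = sum_k w_k * mean_l A[k][l].

    feature_maps: K feature maps, each of length L (e.g. over frequency bins).
    class_weights: the K linear-layer weights of the target class.
    Returns a length-L relevance map normalized to [0, 1].
    """
    length = len(feature_maps[0])
    # For this architecture d(score)/dA[k][l] = w_k / L at every position,
    # so the Grad-CAM channel weight (spatially averaged gradient) is w_k / L.
    alphas = [w / length for w in class_weights]
    # Weighted sum of the feature maps, followed by ReLU.
    cam = [max(0.0, sum(a * fmap[l] for a, fmap in zip(alphas, feature_maps)))
           for l in range(length)]
    peak = max(cam)
    # Normalize so the most relevant frequency bin has value 1.
    return [v / peak for v in cam] if peak > 0 else cam


if __name__ == "__main__":
    maps = [[0.0, 0.1, 1.0, 0.1],   # channel 0 responds mainly to bin 2
            [0.5, 0.5, 0.5, 0.5]]   # channel 1 is a flat, noise-like response
    print(grad_cam_1d(maps, class_weights=[2.0, -1.0]))  # -> [0.0, 0.0, 1.0, 0.0]
```

The ReLU keeps only bins that push the score toward the target class, which is why the flat channel with a negative weight suppresses the background bins.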

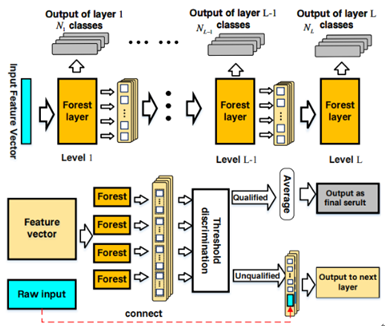

Hierarchical output cascade random forest ensemble learning model for fault classification

Our group uses machine learning-based nonlinear noise reduction and dimensionality reduction methods, together with “wavelet filtering + empirical mode decomposition”, to extract features from signals, and proposes, for the first time, a hierarchical output cascade random forest ensemble learning model. This work has been published in the journal ISA Transactions. A generalized framework of this method has been applied to the fault monitoring and early warning system for the tunnel ventilators of the Hong Kong-Zhuhai-Macao Bridge.
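The wavelet-filtering half of the feature-extraction front end can be illustrated with a minimal sketch. It assumes a single-level Haar transform with soft thresholding of the detail coefficients and the universal threshold sigma * sqrt(2 ln n); the wavelet, the decomposition depth, and the threshold rule are assumptions rather than the published settings, and the empirical mode decomposition and cascade forest stages are not shown.

```python
import math
import statistics
from typing import List


def haar_soft_denoise(signal: List[float]) -> List[float]:
    """One-level Haar wavelet denoising with soft thresholding.

    Assumes a non-empty, even-length signal. The noise level sigma is
    estimated from the median absolute detail coefficient (MAD / 0.6745).
    """
    if not signal or len(signal) % 2:
        raise ValueError("signal must be non-empty with even length")
    s = math.sqrt(2.0)
    pairs = list(zip(signal[0::2], signal[1::2]))
    approx = [(a + b) / s for a, b in pairs]   # low-pass (approximation) part
    detail = [(a - b) / s for a, b in pairs]   # high-pass (detail) part
    sigma = statistics.median(abs(d) for d in detail) / 0.6745
    thr = sigma * math.sqrt(2.0 * math.log(len(signal)))
    # Soft thresholding shrinks small, noise-dominated details toward zero.
    detail = [math.copysign(max(abs(d) - thr, 0.0), d) for d in detail]
    out: List[float] = []
    for a, d in zip(approx, detail):
        out.extend(((a + d) / s, (a - d) / s))  # inverse Haar transform
    return out
```

Soft rather than hard thresholding is used here because it avoids abrupt jumps in the reconstructed signal; a production pipeline would typically use several decomposition levels and a wavelet matched to the vibration data.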

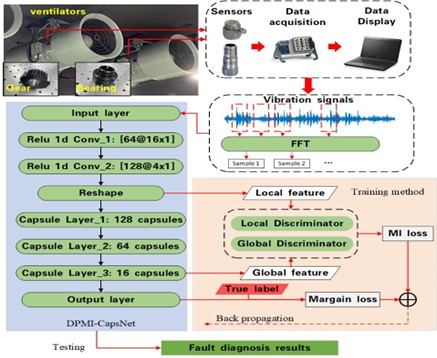

Fault diagnosis of rotating machinery based on a fast capsule network

Our group proposes a novel, fast capsule network-based approach for diagnosing complex faults in rotating machinery. The method introduces dynamic pruning and dynamic routing modules to improve training efficiency. By imposing consistency constraints on capsules within the same layer, it improves the consistency evaluation index of the dynamic routing algorithm and prevents the capsules in a layer from becoming homogeneous. In addition, a composite loss function combining a supervised loss and an unsupervised loss is proposed. The unsupervised loss incorporates multi-scale mutual information and increases the harmonic mean of local and global mutual information, improving fault identification accuracy.
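For readers unfamiliar with capsule routing, the sketch below shows the standard routing-by-agreement procedure from Sabour et al. (2017), on which dynamic routing modules build. It is the baseline algorithm only: the group's consistency constraints, dynamic pruning, and composite loss are not reproduced, and the plain-list representation of prediction vectors is an assumption made to keep the example dependency-free.

```python
import math
from typing import List

Vector = List[float]


def squash(s: Vector) -> Vector:
    """Capsule non-linearity: keeps the direction, maps the length into [0, 1)."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0] * len(s)
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]


def dynamic_routing(u_hat: List[List[Vector]], iterations: int = 3) -> List[Vector]:
    """Routing-by-agreement.

    u_hat[i][j] is input capsule i's prediction vector for output capsule j.
    Returns the output capsule vectors v_j.
    """
    if iterations < 1:
        raise ValueError("iterations must be >= 1")
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    logits = [[0.0] * n_out for _ in range(n_in)]  # routing logits b_ij
    outputs: List[Vector] = []
    for it in range(iterations):
        # Coupling coefficients c_ij: softmax of b_ij over output capsules j.
        coupling = []
        for row in logits:
            m = max(row)
            exps = [math.exp(b - m) for b in row]
            total = sum(exps)
            coupling.append([e / total for e in exps])
        # s_j = sum_i c_ij * u_hat_ij, then v_j = squash(s_j).
        outputs = []
        for j in range(n_out):
            s_j = [sum(coupling[i][j] * u_hat[i][j][d] for i in range(n_in))
                   for d in range(dim)]
            outputs.append(squash(s_j))
        if it < iterations - 1:
            # Agreement update: b_ij += <u_hat_ij, v_j>.
            for i in range(n_in):
                for j in range(n_out):
                    logits[i][j] += sum(p * v for p, v in zip(u_hat[i][j], outputs[j]))
    return outputs
```

Each routing iteration costs a pass over all input/output capsule pairs, which is the overhead that pruning and faster routing schemes aim to reduce.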

Machine Learning-based robot perception and control for safety monitoring

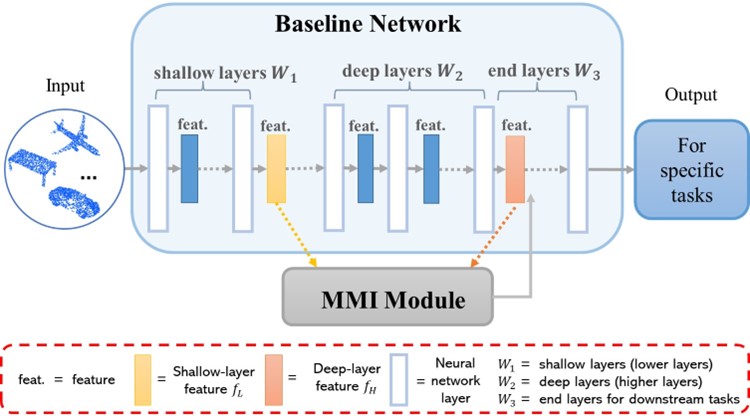

Point cloud completion based on mutual information maximization

To address poor recognition accuracy on sparse and incomplete point clouds in machine vision perception, we propose a point cloud learning enhancement method that maximizes the mutual information between features from different network layers. The approach improves the performance of most general-purpose point cloud learning networks without additional data augmentation.
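The text does not state which mutual information estimator is used. As an assumption, the sketch below uses InfoNCE, a common contrastive lower bound: if row i of two feature matrices comes from the same point cloud (for example, a shallow layer's and a deep layer's features after hypothetical projection heads to a shared dimension), then the mutual information is at least log N minus the loss, so minimizing the loss maximizes that bound.

```python
import math
from typing import List


def info_nce(z_a: List[List[float]], z_b: List[List[float]], temperature: float = 0.1) -> float:
    """InfoNCE loss between paired embeddings from two layers.

    Row i of z_a and row i of z_b describe the same sample (positive pair);
    the other rows of z_b act as negatives for row i of z_a.
    """
    def normalize(v: List[float]) -> List[float]:
        n = math.sqrt(sum(x * x for x in v)) or 1.0
        return [x / n for x in v]

    a = [normalize(v) for v in z_a]
    b = [normalize(v) for v in z_b]
    total = 0.0
    for i, ai in enumerate(a):
        # Cosine similarities to every candidate, scaled by the temperature.
        logits = [sum(x * y for x, y in zip(ai, bj)) / temperature for bj in b]
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # Cross-entropy of picking the true partner among all candidates.
        total += log_denominator - logits[i]
    return total / len(a)
```

Because the loss only needs features the network already computes, a term like this can be added to training without extra data augmentation, which is consistent with the claim above.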

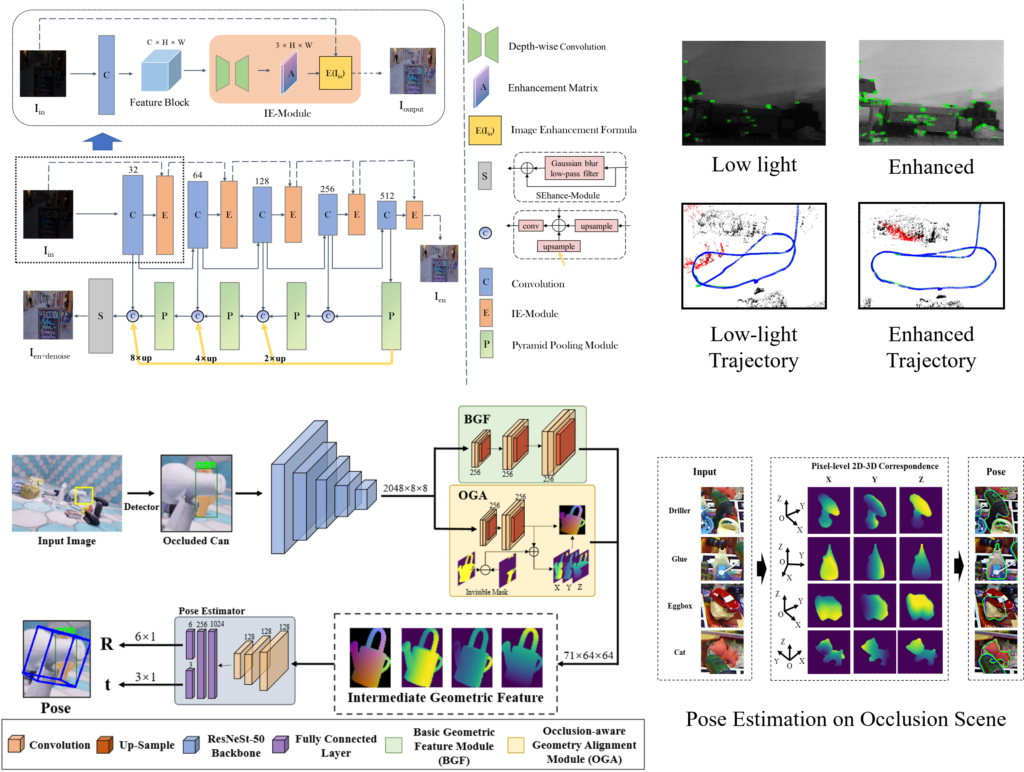

Robust visual machine learning algorithms in unstructured environments

When a safety monitoring robot operates in tunnels, utility tunnels, and similar scenes, it may face insufficient illumination and occluded objects. Insufficient illumination causes the vision sensor to capture dark, noisy images. Occlusion leaves only limited object information visible in the image, reducing the accuracy of object recognition algorithms. To address these problems, we propose an adaptive low-light enhancement algorithm and an occlusion-aware object pose estimation algorithm, which handle image degradation and limited object features respectively, providing robust data for subsequent robot operations.
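The group's low-light enhancement algorithm is not described in detail here. As a simple, hypothetical illustration of what "adaptive" can mean, the sketch below chooses a global gamma from the image itself so that the current mean intensity is mapped onto a target brightness; it does not perform denoising and is not the published method.

```python
import math
from typing import List


def adaptive_gamma(pixels: List[float], target_mean: float = 0.5) -> List[float]:
    """Brighten a low-light image with an image-dependent gamma.

    pixels: intensities in [0, 1]. Gamma is chosen so that an intensity equal
    to the current mean is mapped onto target_mean (mean ** gamma == target_mean).
    """
    if not pixels:
        return []
    mean = sum(pixels) / len(pixels)
    if mean <= 0.0 or mean >= 1.0:
        return list(pixels)  # all-black or all-white: nothing to adapt
    gamma = math.log(target_mean) / math.log(mean)
    # For a dark image (mean < target_mean) gamma < 1, which brightens all pixels.
    return [p ** gamma for p in pixels]
```

A global curve like this also amplifies sensor noise in dark regions, which is why practical low-light pipelines usually pair brightness adaptation with a denoising step.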

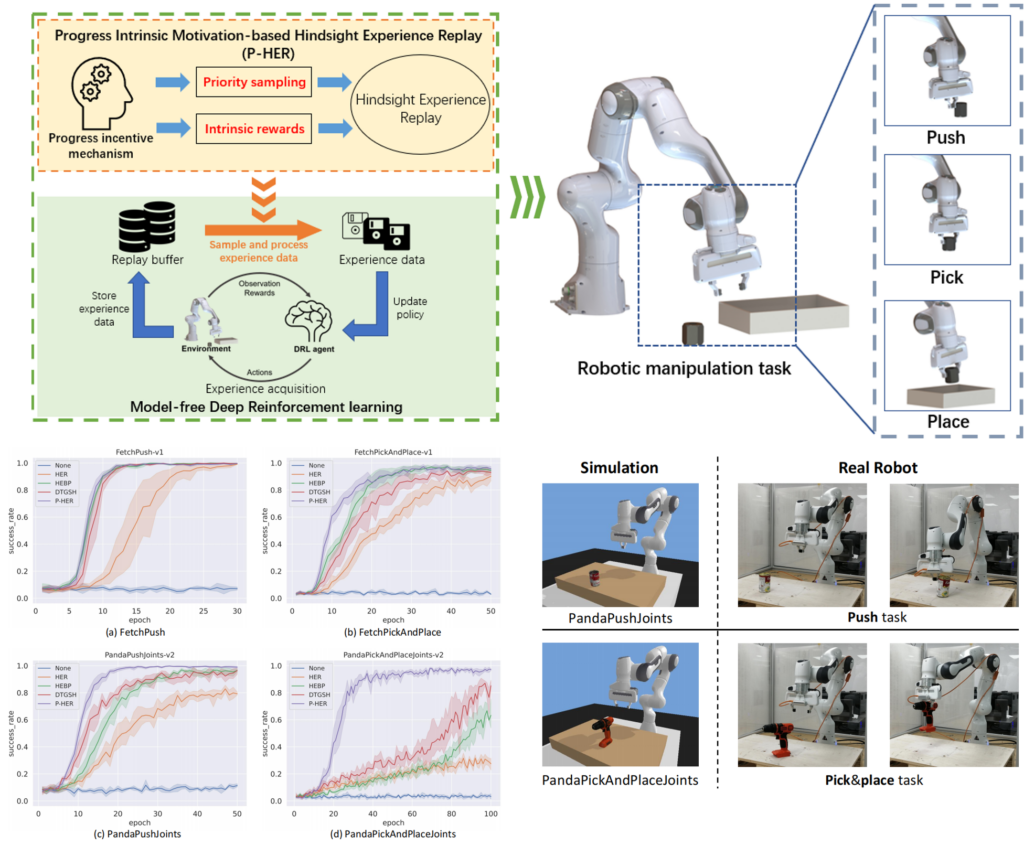

Progress Intrinsic Motivation-based reinforcement learning for robotic safety monitoring tasks

Implementing robotic safety monitoring functions through manual teaching is labor-intensive and inefficient. Our work introduces into reinforcement learning the progress-based motivation mechanism that psychology identifies in human learning, overcoming the poor training performance caused by uniform sampling and sparse rewards. Without manual teaching, the robotic arm achieves robust safety monitoring in industrial scenarios.
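One common way to turn learning progress into a training signal is to replace uniform sampling of goals with sampling weighted by how fast performance on each goal is changing. The sketch below assumes that progress is measured as the change in mean success rate between two recent windows; the goal names, window size, and epsilon mixing are hypothetical choices, not the group's published formulation.

```python
import random
from typing import Dict, List


def learning_progress(history: List[float], window: int) -> float:
    """Absolute change in mean success between the two most recent windows."""
    if len(history) < 2 * window:
        return 0.0  # not enough data yet to measure progress
    recent = history[-window:]
    older = history[-2 * window:-window]
    return abs(sum(recent) / window - sum(older) / window)


def sampling_probs(histories: Dict[str, List[float]], window: int = 5,
                   eps: float = 0.05) -> Dict[str, float]:
    """Convert per-goal learning progress into sampling probabilities.

    eps keeps every goal reachable; with no progress anywhere the
    distribution falls back to uniform.
    """
    lp = {g: learning_progress(h, window) for g, h in histories.items()}
    total = sum(lp.values())
    n = len(lp)
    if total == 0.0:
        return {g: 1.0 / n for g in lp}
    return {g: eps / n + (1 - eps) * v / total for g, v in lp.items()}


def sample_goal(histories: Dict[str, List[float]], rng: random.Random,
                window: int = 5) -> str:
    """Draw the next training goal in proportion to its learning progress."""
    probs = sampling_probs(histories, window)
    goals = list(probs)
    return rng.choices(goals, weights=[probs[g] for g in goals], k=1)[0]


if __name__ == "__main__":
    hist = {"reach": [0] * 5 + [1] * 5, "inspect": [1] * 10, "grasp": [0] * 3}
    print(sampling_probs(hist))
```

Goals that are already mastered or still out of reach show little progress and are sampled rarely, so training effort concentrates where the agent is currently improving.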

Research Projects

- Principal Investigator, Research and application of multi-modal sensing and data-driven intelligent process planning technology for industrial robots, Macao Science and Technology Development Fund Key R&D Project, MOP 6,000,000, 2020-2023.

- Principal Investigator, Research and Application for Intelligent Detection of Packaging Quality of Semiconductor Devices, Joint research project funded by the Ministry of Science and Technology and the Macao Science and Technology Development Fund, MOP 1,540,000, 2024-2026.

- Principal Investigator, Development of a Safety Guaranteed Surgical Navigation System Based on Globally Optimal Registration Algorithm, Macao Science and Technology Development Fund Project, MOP 2,060,000, 2023-2026.

- Principal Investigator, Research and application of 3D robotics vision based intelligent self-positioning manufacturing and compliance control, Guangdong Provincial Department of Science and Technology – Special Topic on the Transformation of Hong Kong and Macao Scientific and Technological Achievements, RMB 500,000, 2023-2024.

- Principal Investigator, Safety evaluation and maintenance management of key onboard electromechanical equipment of Rail Transit in the Greater Bay Area, Guangdong-Guangzhou Joint Fund Guangdong-Hong Kong-Macao Research Team Project, RMB 2,000,000, 2020-2024.

- Principal Investigator, Integrated Elevator safety monitoring big data system and application in smart community, UM-Huafa Group Joint Lab Fund, RMB 3,170,000, 2022-2025.

- Principal Investigator, Key technologies and applications for robust production of welding equipment, Guangzhou International Science and Technology Cooperation Project, RMB 500,000, 2023-2025.

- Principal Investigator, Vertical traffic safety monitoring empowers the construction of smart communities, Zhuhai Science and Technology Innovation Bureau Hong Kong and Macao Cooperation Project, RMB 250,000, 2023-2025.